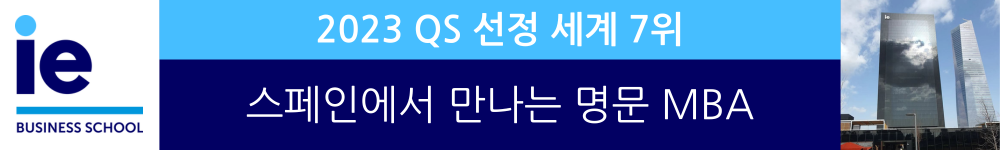

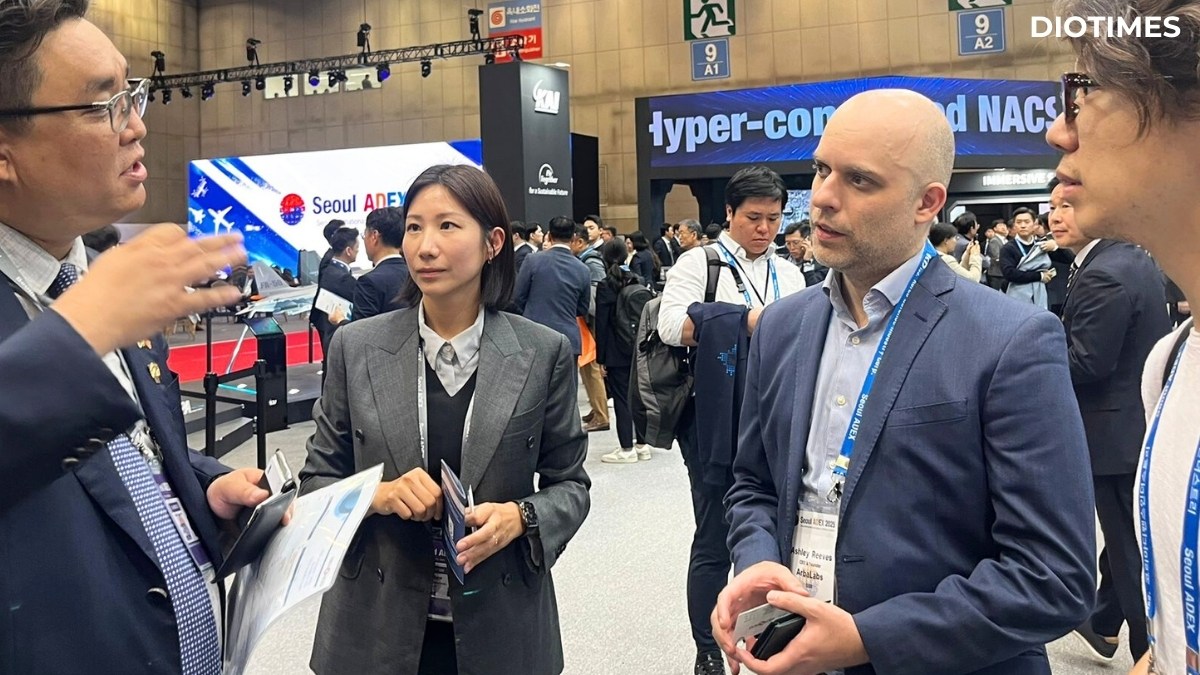

As AI moves beyond data centers and consumer apps into aircraft, vehicles, energy systems, and other safety-critical environments, “trust” can no longer be a slogan—it becomes an engineering requirement and a leadership responsibility. In this DIOTIMES Leaders interview, Ashley Reeves, Founder & CEO of ArbaLabs, describes why the next frontier of AI is verifiability: the ability to prove what an AI system did, where it ran, and whether its outputs can be trusted—especially when systems operate offline, autonomously, and under real-world constraints. He also explains why Korea and Asia are pivotal for building and validating this new layer of infrastructure, and what leadership must look like as regulation and accountability catch up with capability.

Please introduce yourself and summarize your career path. What was the key turning point that led you to focus on trust and integrity for Edge AI?

My career began in fintech and later moved into aerospace. For roughly eight years, I worked across Europe and Asia on systems operating under extreme constraints: limited connectivity, harsh environments, and near-zero tolerance for failure. Much of that work involved bridging European institutions with Asian partners in Korea, Japan, and Taiwan, as well as across Southeast Asia, often through government or publicly funded programs.

The turning point came when I saw AI moving into those same environments: not just data centers or consumer apps, but aircraft, vehicles, energy systems, and critical infrastructure. Yet the assumptions about trust were fundamentally different. In aerospace, trust is designed, tested, and verified. In much of AI, it was simply assumed.

That gap became impossible to ignore. ArbaLabs was founded to bring aerospace-grade verification, accountability, and responsibility to AI systems that are leaving the cloud and entering the real, offline world.

In one sentence, what is ArbaLabs? What problem do you solve?

ArbaLabs builds a decentralized, offline trust layer that enables organizations to verify the behavior of AI systems during and after deployment, especially in environments where connectivity, cloud oversight, or “blind trust” are not acceptable.

How do you define “verifiable” or “integrity” in practical terms for edge deployments? What does success look like for customers?

In practical terms, verifiable AI means organizations are no longer forced to rely on promises or assumptions once AI is deployed and operating outside continuous cloud visibility. They can independently confirm what an AI system did, where it ran, and whether its outputs can be trusted.

Success is not a conventional model metric. It looks like confidence during audits, clarity during incidents, and credibility with regulators and partners. And when something goes wrong, organizations have evidence instead of uncertainty.

What is your business model today, and how do customers typically adopt your solution?

We operate as an infrastructure company rather than a software tool provider. Customers typically engage with ArbaLabs when AI risk can no longer be managed through policy documents or internal controls alone. Because the offline AI market is still immature, we also see a significant first-mover opportunity.

Adoption often starts with a specific high-risk deployment: something offline, autonomous, or regulated. It then expands as organizations recognize that trust must be built into the system itself, not layered on afterward.

What are your core products or services, and what concrete outputs do customers receive?

Our focus is sovereign hardware deployment, and we lead with outcomes rather than features. Customers gain the ability to treat AI systems as accountable machines rather than opaque black boxes.

Concretely, that includes tamper-evident records of AI activity, audit-ready evidence, and a clear separation between what an organization claims its AI does and what it can prove after deployment. That distinction will matter more as regulation matures.
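For technical readers, one widely used way to make activity records tamper-evident is a hash chain: each record stores a hash that covers both its own content and the previous record's hash, so altering any earlier entry invalidates every link after it. The sketch below illustrates that general technique only; it is not ArbaLabs' implementation, and the function names, record fields, and the `device_key` are hypothetical. The HMAC tag stands in, as an assumption, for binding records to a specific device.

```python
import hashlib
import hmac
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first record


def _digest(prev_hash: str, event: dict) -> str:
    """Hash the previous record's hash together with a canonical JSON encoding of the event."""
    payload = prev_hash + json.dumps(event, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def append(log: list, event: dict, device_key: bytes) -> None:
    """Append an event, chain it to the previous record, and tag it with a device-held key."""
    prev_hash = log[-1]["hash"] if log else GENESIS
    h = _digest(prev_hash, event)
    tag = hmac.new(device_key, h.encode("utf-8"), hashlib.sha256).hexdigest()
    log.append({"event": event, "prev": prev_hash, "hash": h, "tag": tag})


def verify(log: list, device_key: bytes) -> bool:
    """Recompute every link; an edited, reordered, or removed (non-final) record breaks the chain."""
    prev_hash = GENESIS
    for record in log:
        if record["prev"] != prev_hash:
            return False  # link to the previous record is broken
        h = _digest(prev_hash, record["event"])
        if h != record["hash"]:
            return False  # record content no longer matches its stored hash
        expected = hmac.new(device_key, h.encode("utf-8"), hashlib.sha256).hexdigest()
        if not hmac.compare_digest(expected, record["tag"]):
            return False  # tag was not produced with this device's key
        prev_hash = h
    return True


if __name__ == "__main__":
    key = b"hypothetical-device-key"
    log = []
    append(log, {"t": 1, "model": "detector-v2", "output": "obstacle"}, key)
    append(log, {"t": 2, "model": "detector-v2", "output": "clear"}, key)
    print(verify(log, key))  # True
    log[0]["event"]["output"] = "clear"  # tamper with an earlier record
    print(verify(log, key))  # False
```

Two limits of this simplified sketch are worth noting. Truncating records from the end of the log goes undetected unless the latest hash is anchored somewhere external, and a symmetric HMAC key means the verifier must hold the same secret as the device. Production systems commonly address the latter with asymmetric signatures so that auditors can check records without being able to forge them.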

Which industries and use cases are your primary focus right now? Can you describe one representative scenario?

We focus on regulated and safety-critical commercial environments: aerospace, industrial automation, energy systems, and emerging autonomous platforms.

A representative scenario is an AI system operating offline in the field. Today, if an incident occurs, organizations often cannot reliably reconstruct what actually happened. With verifiable AI infrastructure in place, uncertainty is replaced by evidence. The conversation shifts from speculation or blame to accountability and learning.

Who is the buyer and decision-maker, and what drives purchase decisions?

Initial interest often comes from engineering or security teams, but the decision is ultimately driven by leadership: executives, compliance owners, and risk committees.

What drives the decision is not performance optimization, but exposure: regulatory risk, reputational risk, and the long-term consequences of deploying AI systems that cannot explain or defend their behavior.

What does the partner ecosystem look like for ArbaLabs?

Because we build foundational infrastructure, partnerships are essential. We work alongside manufacturers, system integrators, research institutions, and public programs.

Our role is deliberately narrow and disciplined. ArbaLabs focuses on the trust and verification layer, while partners focus on domain-specific deployment, manufacturing, and scale. That clarity has been especially important in Asia, where ecosystems are deeply interconnected.

How do you view the competitive landscape? What differentiates ArbaLabs?

Many existing approaches focus on securing AI before deployment, during training or development. That work is important, but it typically assumes connectivity and centralized control.

Our differentiation is that we focus on what happens after deployment, when AI operates autonomously, offline, and under real-world constraints. That is where many assumptions break down and where regulation is now starting to catch up.

Why Korea or Asia? What do you aim to validate or achieve here?

My relationship with Korea predates ArbaLabs. I have worked with Korean partners and institutions for years, and what stands out is Korea’s ability to take complex, abstract ideas and turn them into real, reliable systems.

For ArbaLabs, Asia (and Korea in particular) is where infrastructure can be built and validated. Europe, by contrast, is exceptionally strong in regulation, software integration, and system-level adoption. The two regions are complementary, not competitive.

This alignment is increasingly visible at the policy level as well. The EU and Korea are moving in similar directions on AI governance, emphasizing accountability, traceability, and verifiable behavior. Our participation in the K-Startup Grand Challenge, where we finished fourth overall, reinforced that Korea understands the long-term importance of this shift.

Looking ahead 12–24 months, what are your top priorities and milestones? What does leadership mean to you in the AI era?

Our priorities are focus and discipline: refining our core infrastructure, validating it through real-world deployments, and aligning it with emerging regulatory frameworks such as the EU AI Act and Korea’s AI legislation.

For me, leadership in the AI era means taking responsibility for consequences, not just capabilities. As systems become more autonomous, human judgment becomes more important, not less. Leaders must decide where trust is earned, how it is proven, and when technology should be constrained rather than accelerated.